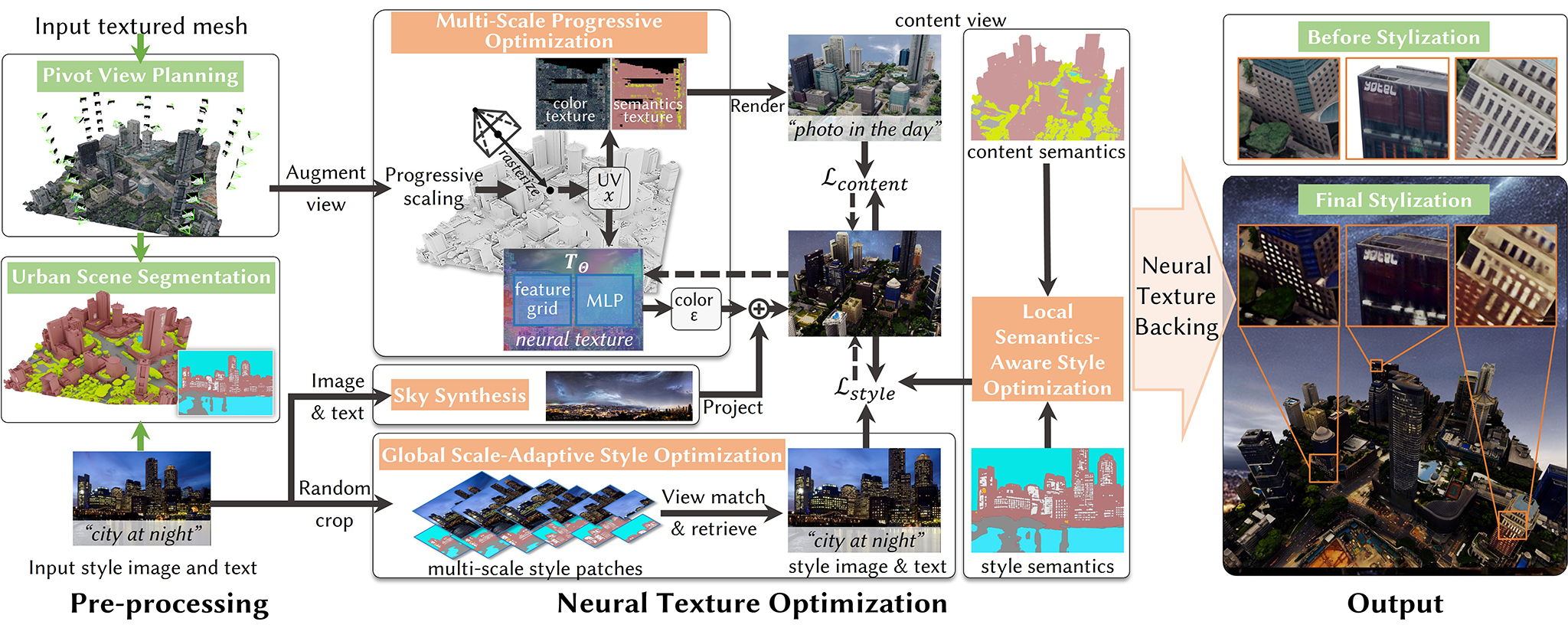

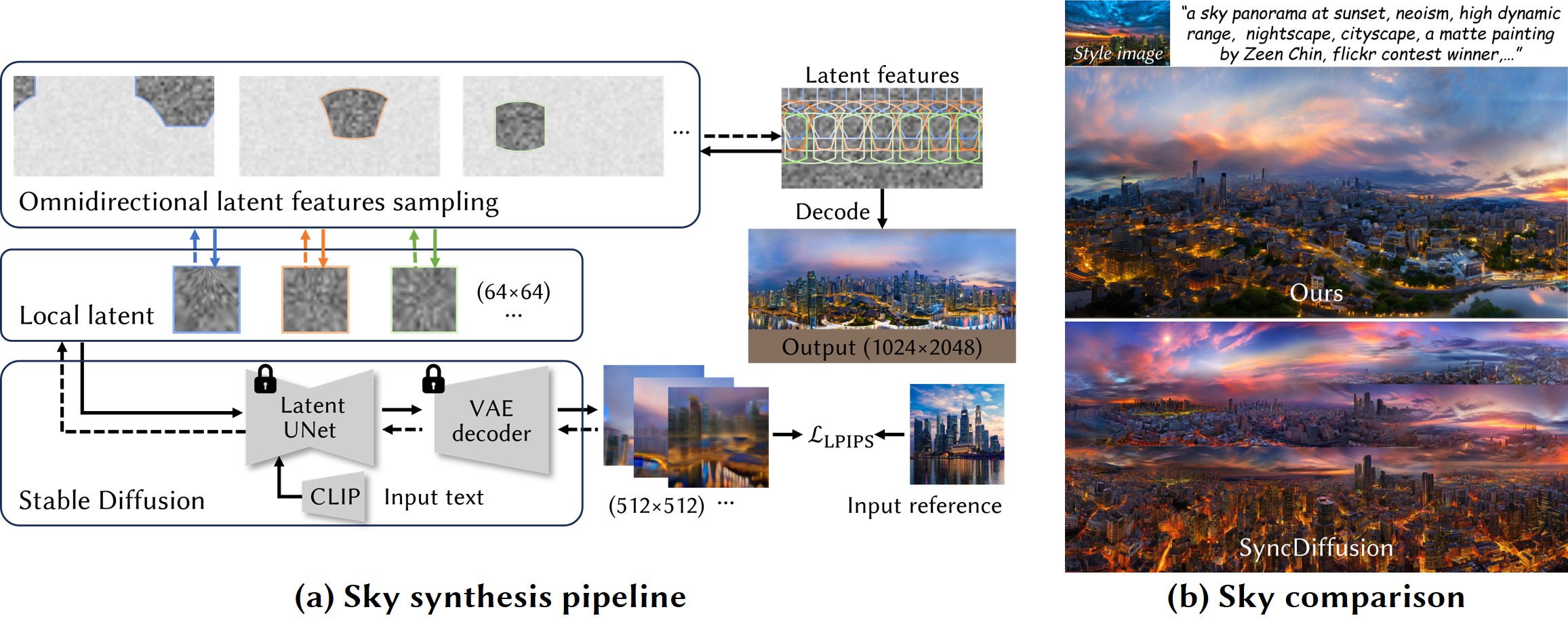

TL;DR: StyleCity synthesizes style-aligned urban textures and a 360° sky background while keeping the scene identity intact.

Yingshu Chen, Huajian Huang, Tuan-Anh Vu, Ka Chun Shum, Sai-Kit Yeung

The Hong Kong University of Science and Technology

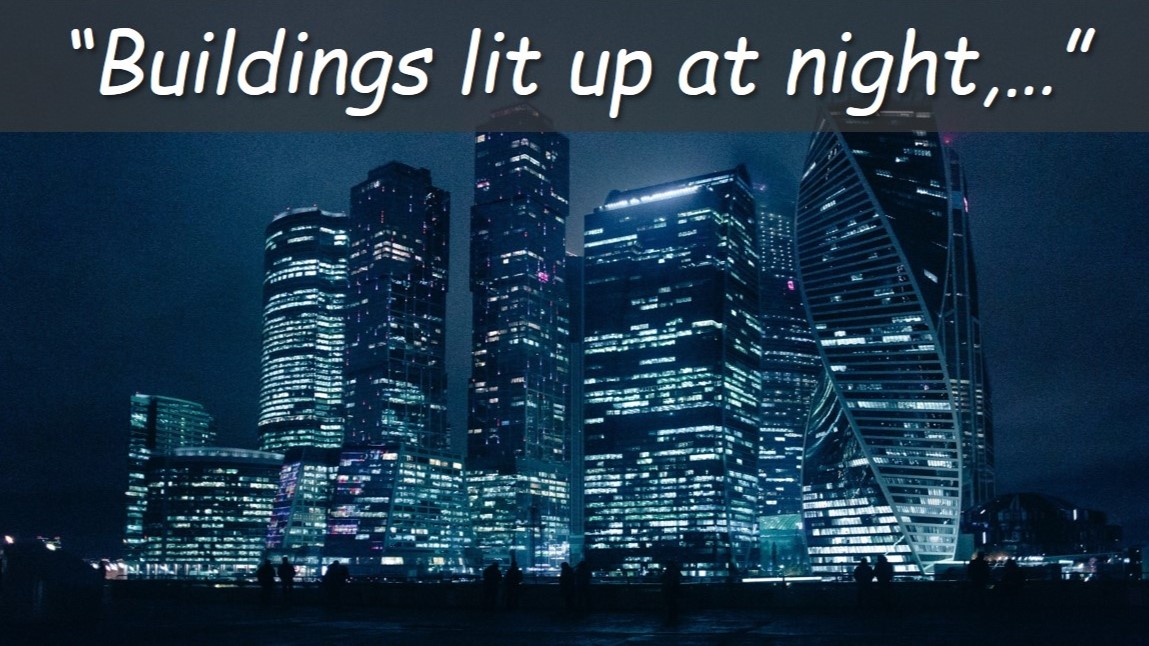

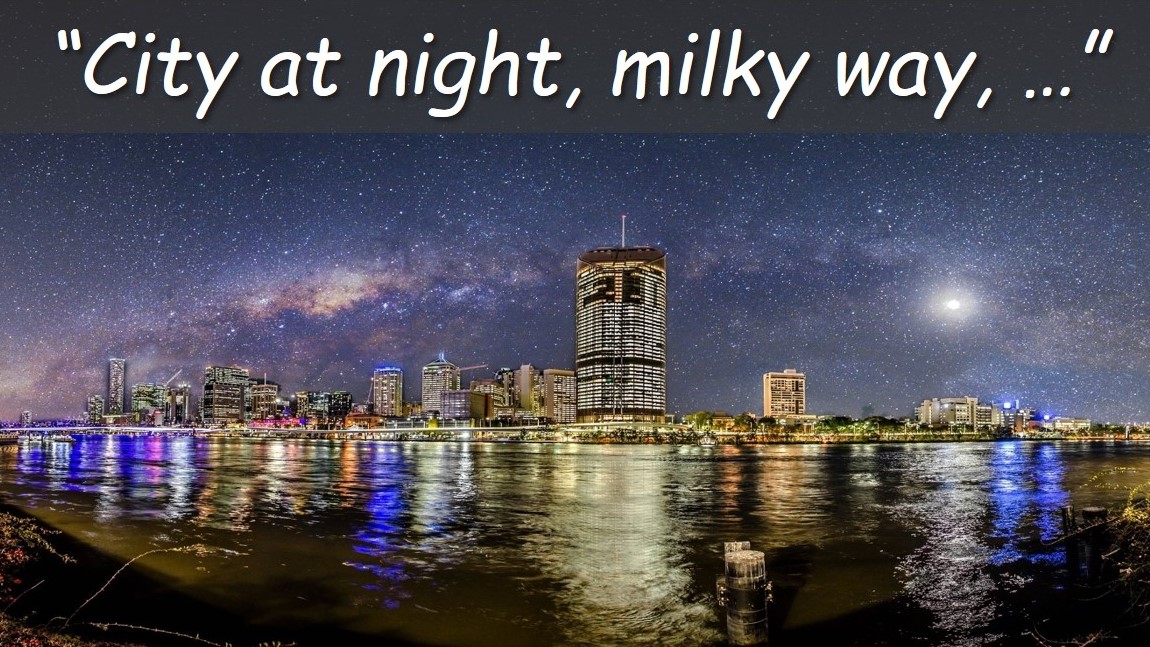

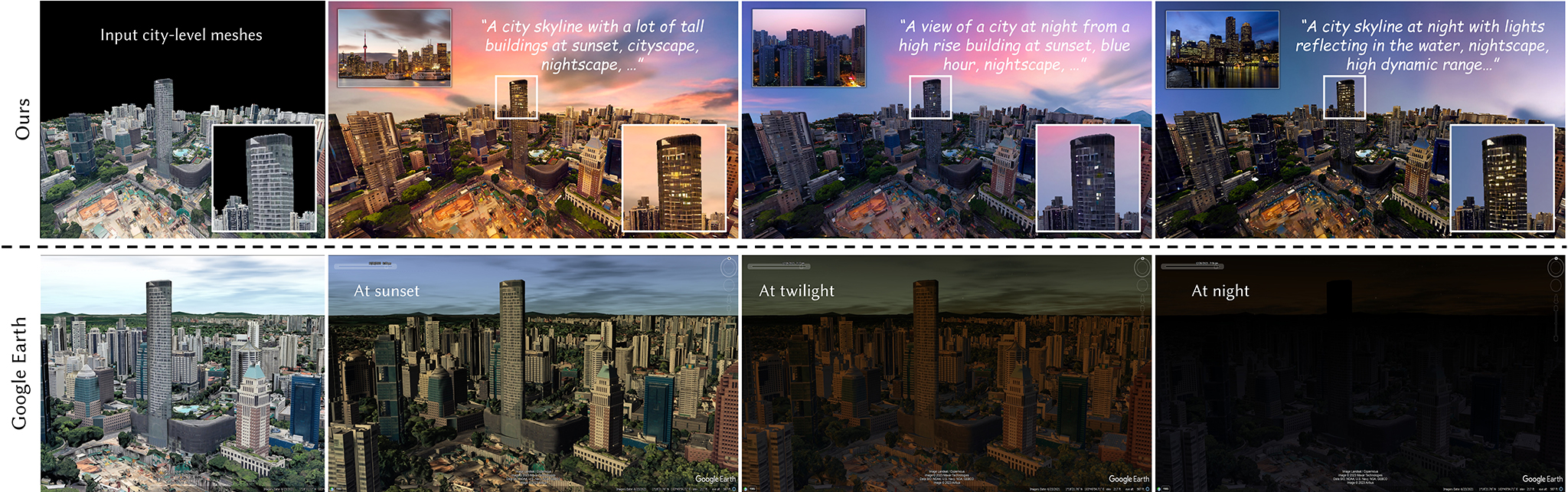

Hallucinating magic times of day for a city.

Comparison of Instruct-NeRF2NeRF, ARF, StyleMesh, and our proposed method. Across different style transfer cases, our results have plausible textures and backgrounds and preserve the scene identity.

Comparisons with Google Earth.

@inproceedings{chen2024stylecity,
  title={StyleCity: Large-Scale 3D Urban Scenes Stylization},
  author={Chen, Yingshu and Huang, Huajian and Vu, Tuan-Anh and Shum, Ka Chun and Yeung, Sai-Kit},
  booktitle={Proceedings of the European Conference on Computer Vision},
  publisher={Springer Nature Switzerland},
  pages={395--413},
  year={2024}
}

Advances in 3D Neural Stylization: A Survey. 2023.

Disentangling Structure and Appearance in ViT Feature Space. TOG 2023.

SyncDiffusion: Coherent Montage via Synchronized Joint Diffusions. NeurIPS 2023.

Time-of-Day Neural Style Transfer for Architectural Photographs. ICCP 2022.

Deep Photo Style Transfer. CVPR 2017.

Data-driven Hallucination for Different Times of Day from a Single Outdoor Photo. SIGGRAPH Asia / TOG 2013.

This work was partially supported by a grant from the Research Grants Council of the Hong Kong Special Administrative Region, China (Project No. HKUST 16202323) and an internal grant from HKUST (R9429).